What is an AI hair changer and why does it matter

AI hair changers are consumer and professional tools that use computer vision and generative AI to replace, recolor, or restyle hair in a photo or video, so users can preview a hairstyle instantly without a salon visit. They speed up decisions for consumers, support stylists in consultations, and power creative content for social media. Accuracy, safety, and speed vary with model architecture, training data, and post-processing blending techniques. This article covers the underlying technology, app comparisons, privacy guidance, and actionable steps for realistic results in 2025.

What core technologies enable AI hair changers and how do they work

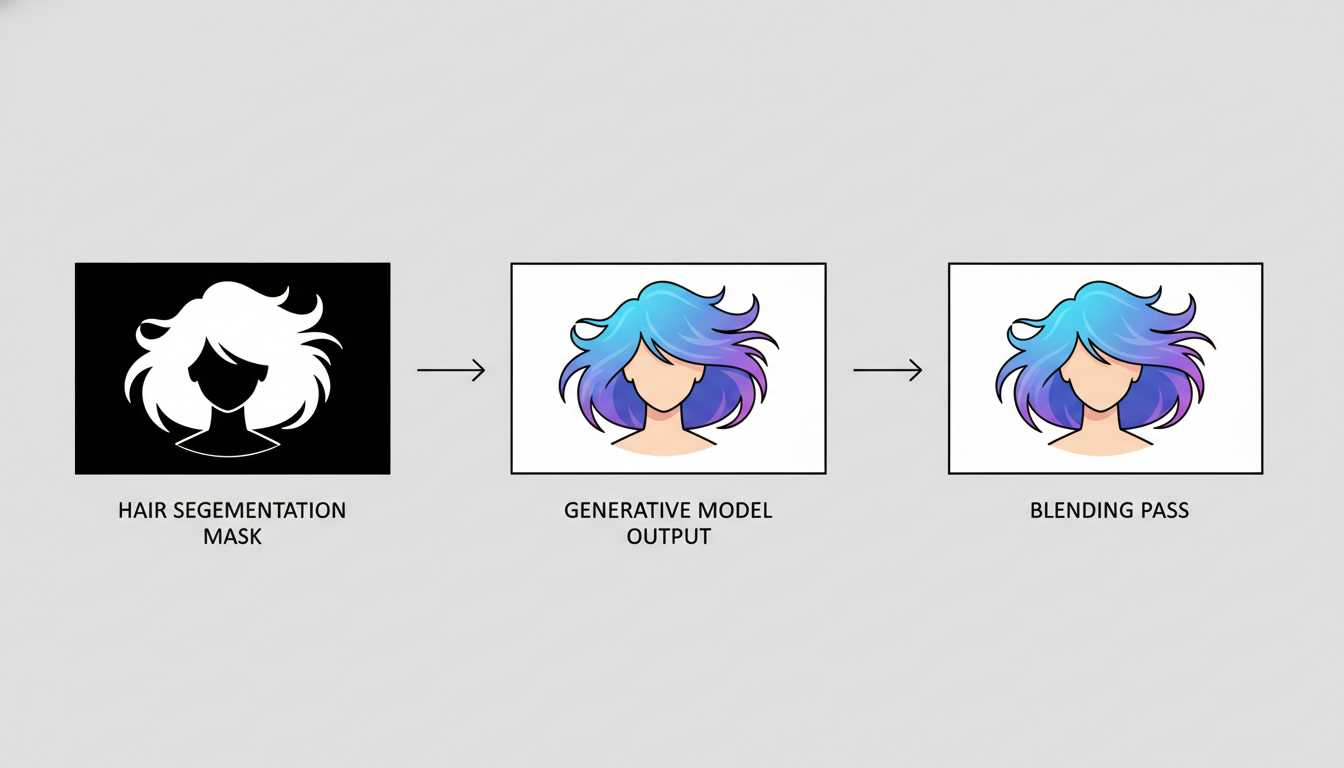

AI hair changer systems combine hair segmentation, image inpainting, and conditional generative models to produce realistic results. First, a hair/face segmentation network (often based on U-Net or HRNet variants) isolates the hair region and facial landmarks. Second, a generative model (diffusion model, GAN, or encoder–decoder transformer) synthesizes new hair shape or color conditioned on style prompts or reference images. Third, alpha blending and color matching align lighting and texture for seamless integration.

These steps map to research and engineering patterns used in modern image tools; for industry reference see the OpenAI Images guide for inpainting and conditional editing patterns. Practical pipelines often add a post-processing compositor and a color-grading pass to avoid the “pasted” look.
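
As a minimal sketch of the blending step, assume the segmentation network outputs a soft hair mask with values in [0, 1] and that images are plain nested lists of RGB tuples (a production pipeline would use an array library instead). The names `blend_pixel` and `composite` are illustrative and not taken from any particular tool.

```python
def blend_pixel(original, generated, alpha):
    """Mix two RGB pixels: alpha=1.0 keeps the generated hair, 0.0 keeps the photo."""
    alpha = min(1.0, max(0.0, alpha))  # clamp so a noisy mask cannot overshoot
    return tuple(round(alpha * g + (1.0 - alpha) * o) for o, g in zip(original, generated))


def composite(original, generated, mask):
    """Blend two same-sized images (rows of RGB tuples) using a soft hair mask."""
    return [
        [blend_pixel(o, g, a) for o, g, a in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# Hypothetical 1x3 image: background, hair edge, hair interior.
photo = [[(10, 10, 10), (100, 100, 100), (50, 40, 30)]]
new_hair = [[(200, 0, 0), (200, 100, 0), (180, 60, 20)]]
soft_mask = [[0.0, 0.5, 1.0]]
result = composite(photo, new_hair, soft_mask)
```

Intermediate mask values at the hair boundary are what soften the edge. A hard 0/1 mask would likely reproduce the "pasted" look described above.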

Which user groups benefit most from AI hair changers and why

Consumers use AI hair changers to preview cuts and vivid dye choices before committing, which reduces decision anxiety and corrective salon visits. Professional stylists and salons use the tools for client consultations, portfolio augmentation, and cross-selling color or styling packages. Content creators and marketers use them to quickly produce variant assets for campaigns and A/B tests. Adoption patterns tracked across consumer apps show high engagement for color-change features and short-form video filters.

What are the accuracy limits and common failure modes in 2025

Current limits stem from dataset bias, extreme lighting, occlusions (hands, scarves), and highly textured or curly hair, all of which make segmentation harder. Common artifacts include blurred hair edges, inconsistent highlights, and haloing where the generated hair does not match head geometry. These issues are most pronounced with low-resolution inputs or when users request dramatic geometry changes (a very different length or shape).

Recent advances in high-resolution diffusion and refinement networks have reduced, but not eliminated, these artifacts. Users should expect reliable color changes and moderate-length style swaps, while radical structural changes may need manual retouching.

How do leading apps compare in features and trustworthiness

- Hairstyle Try On / Hair Styles and Haircuts: Focus on quick haircut previews and gender/length templates; strong UX for trial-and-error styling. (consumer-oriented)

- Hair-style.ai: Web-based free tiers (60+ styles), face-shape suggestions, geared toward no-download accessibility. (browser-first)

- HairApp: Mobile-first iOS/Android app emphasizing color analysis and fast filters; integrates social sharing. (app ecosystem)

- Hairstyle Changer (hairstyle-changer.com): Emphasizes realistic portrait blending and hairstyle catalog for both sexes. (catalog-driven)

When choosing, evaluate: upload policy, model explainability, sample outputs at high resolution, and whether the provider allows data deletion. For enterprise or salon use, prefer vendors that document training sources and provide data handling assurances.

What privacy, consent, and deepfake risks should users and businesses address

AI hair changers process biometric images (faces), so the privacy and misuse risks are significant. Practitioners should follow emerging risk frameworks such as the NIST AI Risk Management Framework for governance and data lifecycle controls. Key risks include unauthorized reuse of generated images, training data collected without documented consent, and repurposing of edits for impersonation.

Mitigations: limit image retention, offer user-controlled deletion, watermark derivatives when used publicly, and implement opt-in consent flows for data collection and model improvement.
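
The retention and consent mitigations can be sketched as a small policy check. This is an illustration under stated assumptions: the `StoredImage` record, the field names, and the 30-day window are all hypothetical, and a real service would enforce the same rules in its storage layer.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StoredImage:
    image_id: str
    uploaded_at: datetime
    training_opt_in: bool = False      # consent is off unless the user opts in
    deletion_requested: bool = False


def images_to_purge(records, now, retention=timedelta(days=30)):
    """Return IDs past the (assumed) retention window or flagged for deletion."""
    return [
        r.image_id
        for r in records
        if r.deletion_requested or now - r.uploaded_at > retention
    ]


def training_eligible(records):
    """Only opted-in images that the user has not asked to delete may be reused."""
    return [r.image_id for r in records if r.training_opt_in and not r.deletion_requested]


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
records = [
    StoredImage("a", now - timedelta(days=45)),
    StoredImage("b", now - timedelta(days=2), training_opt_in=True),
    StoredImage("c", now - timedelta(days=1), training_opt_in=True, deletion_requested=True),
]
```

Defaulting `training_opt_in` to `False` means a missing consent record is treated as a refusal, which matches the opt-in flow described above.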

What empirical signals show growth and consumer acceptance of image-editing AI

Market and research reports indicate rapid consumer adoption of generative image features across social and photo apps. According to industry analysis on AI adoption trends, enterprises and consumer-facing products increasingly embed generative features to boost engagement and conversion rates (see McKinsey: The State of AI). Metrics to monitor include trial-to-conversion for premium filters, reduction in salon consultation time, and social share rates for AI-edited before/after content.

How to get realistic results with an AI hair changer in 7 practical steps

- Start with a high-quality, well-lit front-facing photo; avoid heavy backlighting and extreme angles.

- Choose a style that preserves head geometry (e.g., a length change of no more than about 4–6 inches is safer than a dramatic cut).

- Use color-matched reference photos or the app’s color picker and adjust warmth/saturation to match skin undertones.

- Prefer apps with hair segmentation preview and adjustable hair mask to refine edges.

- Export at the highest available resolution and, if needed, apply small manual retouches (clone/heal) for hairline blending.

- When sharing publicly, consider adding a subtle watermark or label to indicate AI edit.

- Read the app’s privacy policy and delete uploaded images if you don’t want retention for model training.
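
The lighting advice in the first step can be partly automated before upload. The sketch below is a rough heuristic, not a method used by any of the apps discussed: it averages Rec. 709 luma over the pixels and flags photos that look badly exposed. The thresholds `dark` and `bright` are assumed values that would need tuning on real photos.

```python
def mean_luma(pixels):
    """Average Rec. 709 luma (0-255) over a flat list of RGB tuples."""
    total = sum(0.2126 * r + 0.7152 * g + 0.0722 * b for r, g, b in pixels)
    return total / len(pixels)


def exposure_warning(pixels, dark=60.0, bright=200.0):
    """Return a warning string for badly exposed photos, or None if usable."""
    luma = mean_luma(pixels)
    if luma < dark:
        return "too dark"
    if luma > bright:
        return "too bright"
    return None
```

A global average will not catch backlighting on its own, since a bright background can offset a dark face. Running the same check on the face region only would be a natural refinement.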

Which evaluation metrics and tests should developers use to measure quality

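Developers should combine objective and perceptual measures: IoU/F1 for hair segmentation, PSNR for pixel-level reconstruction fidelity, LPIPS for perceptual similarity, and user A/B tests for perceived realism. PSNR and LPIPS both compare an output against a reference image, so they apply to reconstruction tests rather than to open-ended style generation. Additionally, run fairness audits across demographics (hair textures, skin tones) to surface dataset bias. Continuous monitoring and user feedback loops help prioritize model fixes.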
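
For reference, the segmentation and fidelity metrics can be computed as follows. This is a dependency-free sketch that assumes binary masks stored as nested lists of 0/1 and images flattened to lists of 8-bit channel values. LPIPS is omitted because it requires a pretrained network.

```python
import math


def iou_and_f1(pred, truth):
    """Compute IoU and F1 (Dice) for two same-sized binary masks."""
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    union = tp + fp + fn
    iou = tp / union if union else 1.0           # two empty masks agree perfectly
    f1 = 2 * tp / (2 * tp + fp + fn) if union else 1.0
    return iou, f1


def psnr(reference, output, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two flat lists of channel values."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, output)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(max_value ** 2 / mse)


pred_mask = [[1, 1, 0], [0, 1, 0]]
true_mask = [[1, 0, 0], [0, 1, 1]]
iou, f1 = iou_and_f1(pred_mask, true_mask)
```

In this example the masks share two pixels and disagree on two, so IoU is 0.5 and F1 is about 0.667. F1 is always at least as high as IoU, which is worth remembering when comparing numbers across reports.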

Practical guidance for businesses integrating AI hair changer features in 2025

- Define clear data governance: opt-in training data, retention limits, and deletion APIs.

- Provide transparency: disclose synthetic edits and offer an “undo” or mask-editing UI for user control.

- Offer tiered quality: mobile-friendly fast mode and a high-quality server-rendered mode for downloads.

- Monitor regulatory developments around biometric data and deepfakes; align with frameworks like NIST AI RMF.

Actionable checklist for a realistic and responsible AI hair changer workflow

- Collect diverse hair texture datasets with documented consent.

- Train segmentation + generative pipeline and evaluate on per-demographic slices.

- Implement client-side preview with server-side high-quality render option.

- Expose privacy controls and an image deletion endpoint.

- Add user education microcopy about limits and expected artifacts.
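
The per-demographic evaluation item can be sketched as a simple grouping step. The slice labels, the sample scores, and the 0.05 tolerance below are made up for illustration. The idea is only to show how per-sample segmentation scores might be aggregated so that weaker slices stand out.

```python
from collections import defaultdict
from statistics import mean


def iou_by_slice(samples):
    """Average IoU per slice from (slice_label, iou) pairs."""
    grouped = defaultdict(list)
    for label, iou in samples:
        grouped[label].append(iou)
    return {label: mean(values) for label, values in grouped.items()}


def underperforming(slice_means, tolerance=0.05):
    """Slices whose mean IoU falls more than `tolerance` below the best slice."""
    best = max(slice_means.values())
    return sorted(label for label, value in slice_means.items() if best - value > tolerance)


# Hypothetical evaluation results keyed by hair texture.
samples = [
    ("straight", 0.90), ("straight", 0.92),
    ("coily", 0.70), ("coily", 0.80),
    ("wavy", 0.88),
]
slice_means = iou_by_slice(samples)
flagged = underperforming(slice_means)
```

Comparing against the best slice rather than the overall mean keeps a large, well-served group from hiding a gap in a smaller one.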

References: According to the OpenAI Images guide, conditional image editing uses inpainting and mask conditioning to produce coherent edits. For market context, see the analysis in McKinsey: The State of AI. For governance and risk practices, consult the NIST AI Risk Management Framework.