Image-to-video AI is no longer a research curiosity. It is a production tool that is changing how creators, marketers, and studios tell stories. CoopeAI.com positions itself as an all-in-one portal for text-to-image, image editing, image-to-video conversion, and article generation. This article explains what the image-to-video capability actually means, when it helps (and when it doesn't), and how teams should vet tools before integrating them into their workflows.

How CoopeAI.com shortens the path from a still to motion

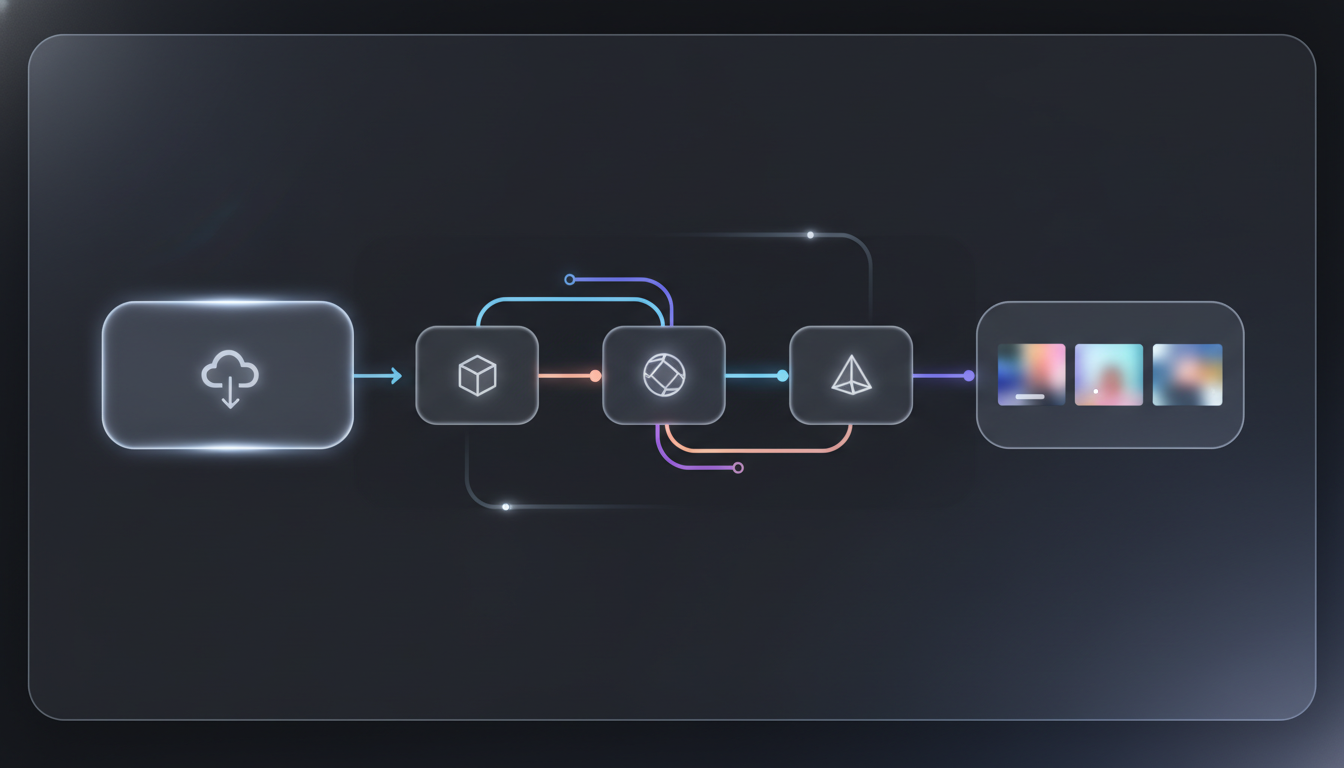

The practical value of an image-to-video pipeline is that it collapses multiple production steps into one loop that is easy to iterate on. Instead of moving through concept art → storyboard → 3D assets → animation → render, tools like CoopeAI.com let you start from a photo or generated image and produce short animated clips quickly. That speed is useful in three scenarios:

- Rapid prototyping of visual ideas for ads and social content.

- Bringing product photos to life for e-commerce (spins, short demos, contextualized motion).

- Creating ambient or illustrative content for editorial and social distribution where photorealism is not required.

In those workflows, time-to-output matters more than absolute fidelity. Enterprise teams should ask: do we need broadcast-quality frames, or do we need fast, iterative visual concepts? The latter is where image-to-video AI shines.

The technical trade-offs behind the magic

Image-to-video generation typically relies on diffusion models, often operating in a compressed latent space, with added temporal modeling to keep frames coherent over time. Research systems such as Google’s Imagen Video and Meta’s Make-A-Video, both introduced as text-to-video systems, demonstrate the key principle: strong single-frame quality combined with learned motion priors produces plausible short clips. Both papers are listed under References for readers who want the underlying approach.

Important constraints to keep in mind:

- Temporal consistency vs. creativity: keeping object identity steady across frames is hard; many models introduce subtle drifting artifacts.

- Resolution and length: most consumer-oriented pipelines produce short clips (2–10s) at HD or lower; high-resolution, long-form video still requires conventional VFX pipelines.

- Control granularity: image conditioning helps retain details, but fine control over camera motion, occlusion handling, and physics remains limited.

- Compute and latency: faster iterations require optimized inference; web services often balance cost by offering lower-res previews and paid high-res renders.

Understanding these trade-offs helps you pick the right use case: concept and social clips vs. feature-grade production.
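
The temporal-consistency constraint is easier to discuss with a number attached. The following is a minimal sketch, not a method used by CoopeAI.com or by the papers cited above: it assumes frames have already been decoded into small grayscale grids (nested lists of intensities in the range 0 to 1), and the function names `drift_from_source` and `flicker` are hypothetical. It measures how far each generated frame has moved from the source image and how much consecutive frames differ. Pixel difference is a crude proxy: it cannot tell intended motion from unwanted drift, so treat it as a screening signal for human review.

```python
from typing import List, Sequence

# A grayscale frame: rows of pixel intensities in [0, 1] (assumed format).
Frame = Sequence[Sequence[float]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two same-sized frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += abs(pixel_a - pixel_b)
            count += 1
    if count == 0:
        raise ValueError("frames must contain at least one pixel")
    return total / count


def drift_from_source(source: Frame, frames: Sequence[Frame]) -> List[float]:
    """Difference between the conditioning image and each generated frame.

    A steadily rising curve suggests the clip is moving away from the source.
    """
    return [mean_abs_diff(source, frame) for frame in frames]


def flicker(frames: Sequence[Frame]) -> List[float]:
    """Difference between consecutive frames; spikes hint at pops or flicker."""
    return [mean_abs_diff(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]


# Toy 2x2 example: the top row brightens gradually over three frames.
source_image = [[0.0, 0.0], [1.0, 1.0]]
clip = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 0.5], [1.0, 1.0]],
    [[0.5, 0.5], [1.0, 1.0]],
]
print(drift_from_source(source_image, clip))  # [0.0, 0.125, 0.25]
print(flicker(clip))                          # [0.125, 0.125]
```

In practice a team would run something like this over the same sample assets for each candidate tool and look at the outliers by eye, rather than rank tools on the score alone.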

Business models and value capture for platforms like CoopeAI.com

Platforms that combine generation, editing, and article production create cross-sell opportunities:

- Freemium for discovery, paid tiers for higher resolution, longer clips, or commercial licenses.

- API access and white-labeling for agencies and publishers who need volume integration.

- Asset marketplaces where creators sell prompts, presets, and generated clips.

For enterprises, the ROI calculation typically hinges on speed, cost per approved creative, and licensing clarity. If CoopeAI.com can reliably reduce the creative cycle from days to hours for certain asset classes, it becomes defensible as a utility rather than a novelty.
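
The cost-per-approved-creative comparison can be made concrete with a few lines of arithmetic. The sketch below is illustrative only: the `Workflow` class, its field names, and every number in it are hypothetical placeholders, not measurements of CoopeAI.com or any other tool. Substitute your own tracked costs and approval counts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    label: str
    total_cost: float      # tool fees plus staff time, in one currency
    drafts_produced: int   # clips generated or commissioned
    drafts_approved: int   # clips that passed review
    cycle_hours: float     # time from brief to approved asset

    @property
    def cost_per_approved(self) -> float:
        """Total spend divided by the number of creatives that were approved."""
        if self.drafts_approved <= 0:
            raise ValueError("no approved creatives; the metric is undefined")
        return self.total_cost / self.drafts_approved

    @property
    def approval_rate(self) -> float:
        """Share of produced drafts that were approved."""
        if self.drafts_produced <= 0:
            raise ValueError("no drafts produced; the rate is undefined")
        return self.drafts_approved / self.drafts_produced


# Hypothetical figures, chosen only to show the calculation.
conventional = Workflow("conventional", 4000.0, 10, 8, 72.0)
ai_assisted = Workflow("ai-assisted", 600.0, 60, 12, 6.0)

for workflow in (conventional, ai_assisted):
    print(
        f"{workflow.label}: "
        f"{workflow.cost_per_approved:.2f} per approved creative, "
        f"{workflow.approval_rate:.0%} approved, "
        f"{workflow.cycle_hours:.0f} h cycle"
    )
```

Note that a low approval rate does not by itself make a tool uneconomical: what matters is the cost and time per approved asset, which is why both are tracked alongside the raw draft count.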

Ethical, legal, and brand-safety considerations

Image-to-video tools surface a mix of legal and reputational risks:

- Copyright and likeness: transforming a copyrighted photo into motion may still implicate the rights of the copyright holder or of any person depicted. Ensure licensing terms are explicit.

- Deepfake risks: these systems can be misused to create deceptive likenesses; platform-level safeguards and watermarking are important.

- Attribution and provenance: content pipelines should record prompt and model metadata to support audits and takedown flows.

Teams should insist on clear Terms of Service and enterprise controls (allowlists, banned-content filters, and provenance logs) before adoption.
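
The provenance logging described above can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not a description of any platform's actual logging: the `ProvenanceRecord` fields, the `make_record` and `to_log_line` helpers, and the example model version string are all hypothetical. The idea is to store a hash of the source image together with the prompt, model version, and a licensing note, so that an audit or takedown request can be traced back to a specific generation.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProvenanceRecord:
    source_sha256: str   # fingerprint of the input image bytes
    prompt: str          # text prompt used for the generation
    model_version: str   # provider-reported model identifier (assumed to be available)
    license_note: str    # where the right to use the source image comes from
    created_at: str      # UTC timestamp in ISO 8601 format


def make_record(
    source_bytes: bytes, prompt: str, model_version: str, license_note: str
) -> ProvenanceRecord:
    """Build a record for one generation request."""
    return ProvenanceRecord(
        source_sha256=hashlib.sha256(source_bytes).hexdigest(),
        prompt=prompt,
        model_version=model_version,
        license_note=license_note,
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record: ProvenanceRecord) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Example with placeholder values; real code would read the image file's bytes.
record = make_record(
    source_bytes=b"placeholder image bytes",
    prompt="slow push-in on the product, soft daylight",
    model_version="example-model-v1",  # hypothetical identifier
    license_note="in-house product photo",
)
print(to_log_line(record))
```

Hashing the source rather than storing it keeps the log small and avoids duplicating licensed material, while still letting a reviewer confirm which input produced a given clip.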

Practical checklist for evaluating an image-to-video provider

- Output fidelity and artifacts: test with your sample assets across multiple scenes.

- Length and resolution limits: confirm export formats and commercial-use licensing.

- Editing controls: can you refine motion, retarget camera paths, or re-run with subtle prompt edits?

- Integration options: does the provider offer an API, batch processing, or SSO for teams?

- Auditability: are prompts, model versions, and usage logs available for compliance?
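
One way to compare providers against the checklist above is a simple weighted scorecard. The sketch below is an assumption-laden illustration: the criterion keys, the 0 to 5 rating scale, and the `weighted_score` function are hypothetical choices, and the ratings themselves still have to come from hands-on testing with your own assets.

```python
from typing import Dict, Optional

# Keys mirror the five checklist items; the names are illustrative.
CRITERIA = (
    "output_fidelity",
    "length_and_resolution",
    "editing_controls",
    "integration",
    "auditability",
)


def weighted_score(
    ratings: Dict[str, int], weights: Optional[Dict[str, float]] = None
) -> float:
    """Weighted mean of 0-5 ratings; every criterion must be rated.

    Criteria without an explicit weight default to a weight of 1.0.
    """
    weights = weights or {}
    missing = [name for name in CRITERIA if name not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")

    total = 0.0
    weight_sum = 0.0
    for name in CRITERIA:
        rating = ratings[name]
        if not 0 <= rating <= 5:
            raise ValueError(f"rating for {name} must be between 0 and 5")
        weight = weights.get(name, 1.0)
        if weight < 0:
            raise ValueError(f"weight for {name} must not be negative")
        total += weight * rating
        weight_sum += weight
    if weight_sum == 0:
        raise ValueError("at least one weight must be positive")
    return total / weight_sum


# Placeholder ratings for one hypothetical provider.
ratings = {
    "output_fidelity": 4,
    "length_and_resolution": 3,
    "editing_controls": 2,
    "integration": 5,
    "auditability": 1,
}
print(weighted_score(ratings))                             # equal weights
print(weighted_score(ratings, {"auditability": 2.0}))      # compliance-heavy team
```

A regulated team might weight auditability more heavily, as in the second call, while a social-content team might weight editing controls instead. A single score should prompt discussion rather than replace it.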

Where this technology heads next

Expect three converging trends over the next 12–24 months:

- Better long-term coherence as models learn stronger temporal priors and scene decomposition.

- Hybrid pipelines that combine AI-generated motion with lightweight 3D proxies for physically plausible camera movement.

- Industry-specific verticals (fashion try-ons, product demos, architectural flythroughs) with tailored prompts and templates that improve out-of-the-box results.

For teams evaluating CoopeAI.com, treat it as a strategic accelerator for low-to-mid fidelity visual production. For high-stakes, high-fidelity needs, plan for hybrid workflows that marry AI speed with human-led finishing.

References:

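- Imagen Video paper: Ho et al., "Imagen Video: High Definition Video Generation with Diffusion Models" (Google Research, 2022).

- Make-A-Video paper: Singer et al., "Make-A-Video: Text-to-Video Generation without Text-Video Data" (Meta AI, 2022).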